How many users does Twitter have?

Here’s a short summary of a failed experiment using the Principle of Inclusion/Exclusion to estimate how many users Twitter has. I.e., there’s no answer below, just the outline of some quick coding.

I was wondering about this over cereal this morning. I know some folks at Twitter, and I know some folks who have access to the full tweet database, so I could perhaps get that answer just by asking. But that wouldn’t be any fun, and I probably couldn’t blog about it.

I was at FOWA last week and it seemed that absolutely everyone was on Twitter. Plus, they were active users, not people who’d created an account and didn’t use it. If Twitter’s usage pattern looks anything like a Power Law as we might expect, there will be many, many inactive or dormant accounts for every one that’s moderately active.

BTW, I’m terrycojones on Twitter. Follow me please, I’m trying to catch Jason Calacanis.

You could have a crack at answering the question by looking at Twitter user id numbers via the API and trying to estimate how many users there are. I did play with that at one point at least with tweet ids, but although they increase there are large holes in the tweet id space. And approaches like that have to go through the Twitter API, which limits you to a mere 70 requests per hour – not enough for any serious (and quick) probing.

In any case, I was looking at the Twitter Find People page. Go to the Search tab and you can search for users.

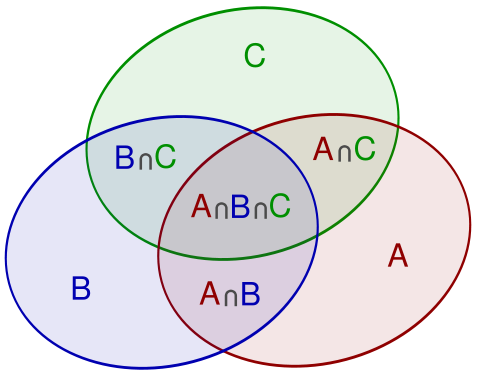

I searched for the single letter A, and got around 109K hits. That lead me to think that I could get a bound on Twitter’s size using the Principle of Inclusion/Exclusion (PIE). (If you don’t know what that is, don’t be intimidated by the math – it’s actually very simple, just consider the cases of counting the size of the union of 2 and 3 sets). The PIE is a beautiful and extremely useful tool in combinatorics and probability theory (some nice examples can be found in Chapter 3 of the introductory text Applied Combinatorics With Problem Solving). The image above comes from the Wikipedia page.

To get an idea of how many Twitter users there are, we can add the number of people with an A in their name to the number with a B in their name, …., to the number with a Z in their name.

That will give us an over-estimate though, as names typically have many letters in them. So we’ll be counting users multiple times in this simplistic sum. That’s where the PIE comes in. The basic idea is that you add the size of a bunch of sets, and then you subtract off the sizes of all the pairwise intersections. Then you add on the sizes of all the triple set intersections, and so on. If you keep going, you get the answer exactly. If you stop along the way you’ll have an upper or lower bound.

So I figured I could add the size of all the single-letter searches and then adjust that downwards using some simple estimates of letter co-occurrence.

That would definitely work.

But then the theory ran full into the reality of Twitter.

To begin with, Twitter gives zero results if you search for S or T. I have no idea why. It gives a result for all other (English) letters. My only theory was that Twitter had anticipated my effort and the missing S and T results were their way of saying Stop That!

Anyway, I put the values for the 24 letters that do work into a Python program and summed them:

count = dict(a = 108938,

b = 12636,

c = 13165,

d = 21516,

e = 14070,

f = 5294,

g = 8425,

h = 7108,

i = 160592,

j = 9226,

k = 12524,

l = 8112,

m = 51721,

n = 11019,

o = 9840,

p = 8139,

q = 1938,

r = 10993,

s = 0,

t = 0,

u = 8997,

v = 4342,

w = 6834,

x = 8829,

y = 8428,

z = 3245)

upperBoundOnUsers = sum(count.values())

print 'Upper bound on number of users:', upperBoundOnUsers

The total was 515,931.

Remember that that’s a big over-estimate due to duplicate counting.

And unless I really do live in a tech bubble, I think that number is way too small – even without adjusting it using the PIE.

(If we were going to adjust it, we could try to estimate how often pairs of letters co-occur in Twitter user names. That would be difficult as user names are not like normal words. But we could try.)

Looking at the letter frequencies, I found them really strange. I wrote a tiny bit more code, using the English letter frequencies as given on Wikipedia to estimate how many hits I’d have gotten back on a normal set of words. If we assume Twitter user names have an average length of 7, we can print the expected numbers versus the actual numbers like this:

# From http://en.wikipedia.org/wiki/Letter_frequencies

freq = dict(a = 0.08167,

b = 0.01492,

c = 0.02782,

d = 0.04253,

e = 0.12702,

f = 0.02228,

g = 0.02015,

h = 0.06094,

i = 0.06966,

j = 0.00153,

k = 0.00772,

l = 0.04025,

m = 0.02406,

n = 0.06749,

o = 0.07507,

p = 0.01929,

q = 0.00095,

r = 0.05987,

s = 0.06327,

t = 0.09056,

u = 0.02758,

v = 0.00978,

w = 0.02360,

x = 0.00150,

y = 0.01974,

z = 0.00074)

estimatedUserNameLen = 7

for L in sorted(count.keys()):

probNotLetter = 1.0 - freq[L]

probOneOrMore = 1.0 - probNotLetter ** estimatedUserNameLen

expected = int(upperBoundOnUsers * probOneOrMore)

print "%s: expected %6d, saw %6d." % (L, expected, count[L])

Which results in:

a: expected 231757, saw 108938. b: expected 51531, saw 12636. c: expected 92465, saw 13165. d: expected 135331, saw 21516. e: expected 316578, saw 14070. f: expected 75281, saw 5294. g: expected 68517, saw 8425. h: expected 183696, saw 7108. i: expected 204699, saw 160592. j: expected 5500, saw 9226. k: expected 27243, saw 12524. l: expected 128942, saw 8112. m: expected 80866, saw 51721. n: expected 199582, saw 11019. o: expected 217149, saw 9840. p: expected 65761, saw 8139. q: expected 3421, saw 1938. r: expected 181037, saw 10993. s: expected 189423, saw 0. t: expected 250464, saw 0. u: expected 91732, saw 8997. v: expected 34301, saw 4342. w: expected 79429, saw 6834. x: expected 5392, saw 8829. y: expected 67205, saw 8428. z: expected 2666, saw 3245.

You can see there are wild differences here.

While it’s clearly not right to be multiplying the probability of one or more of each letter appearing in a name by the 515,931 figure (because that’s a major over-estimate), you might hope that the results would be more consistent and tell you how much of an over-estimate it was. But the results are all over the place.

I briefly considered writing some code to scrape the search results and calculate the co-occurrence frequencies (and the actual set of letters in user names). Then I noticed that the results don’t always add up. E.g., search for C and you’re told there are 13,190 results. But the results come 19 at a time and there are 660 pages of results (and 19 * 660 = 12,540, which is not 13,190).

At that point I decided not to trust Twitter’s results and to call it quits.

A promising direction (and blog post) had fizzled out. I was reminded of trying to use AltaVista to compute co-citation distances between web pages back in 1996. AltaVista was highly variable in its search results, which made it hard to do mathematics.

I’m blogging this as a way to stop thinking about this question and to see if someone else wants to push on it, or email me the answer. Doing the above only took about 10-15 mins. Blogging it took at least a couple of hours :-(

Finally, in case it’s not clear there are lots of assumptions in what I did. Some of them:

- We’re not considering non-English letters (or things like underscores, which are common) in user names.

- The mean length of Twitter user names is probably not 7.

- Twitter search returns user names that don’t contain the searched-for letter (instead, the letter appears in the user’s name, not the username).

You can follow any responses to this entry through the RSS 2.0 feed. Both comments and pings are currently closed.

November 14th, 2009 at 4:07 am

It is very interesting indeed

regards

ashley beos

______________________________________________